In BI, semantic layers, and data products, the hardest part of “ask questions in natural language” is not making the model more fluent; it is making the whole system controllable, explainable, reusable, and governable.

Datafor AI Agent is not built on the idea of “let a large model directly generate SQL/MDX/QueryModel in one shot.”

Instead, it engineers AI capability into three stable building blocks:

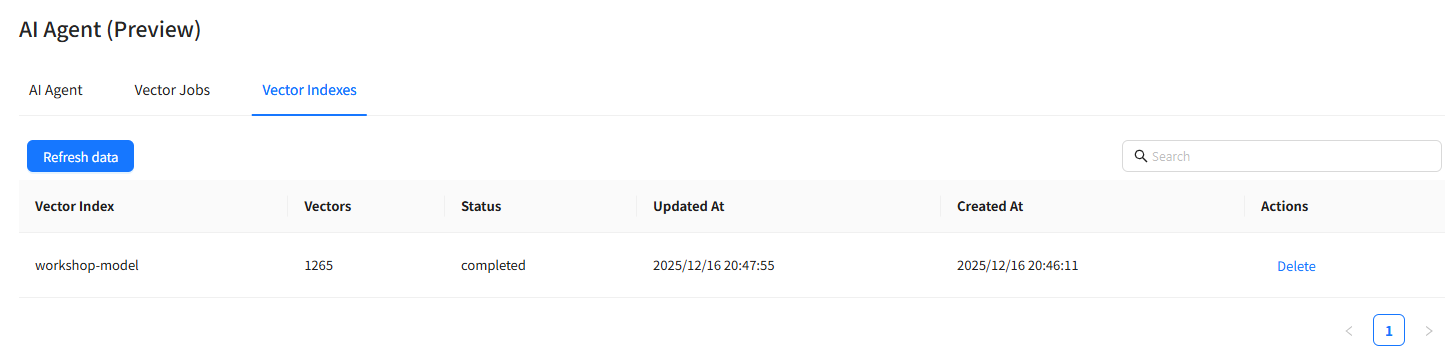

- Vector Database: semantic search and context retrieval (so the Agent can find the right things)

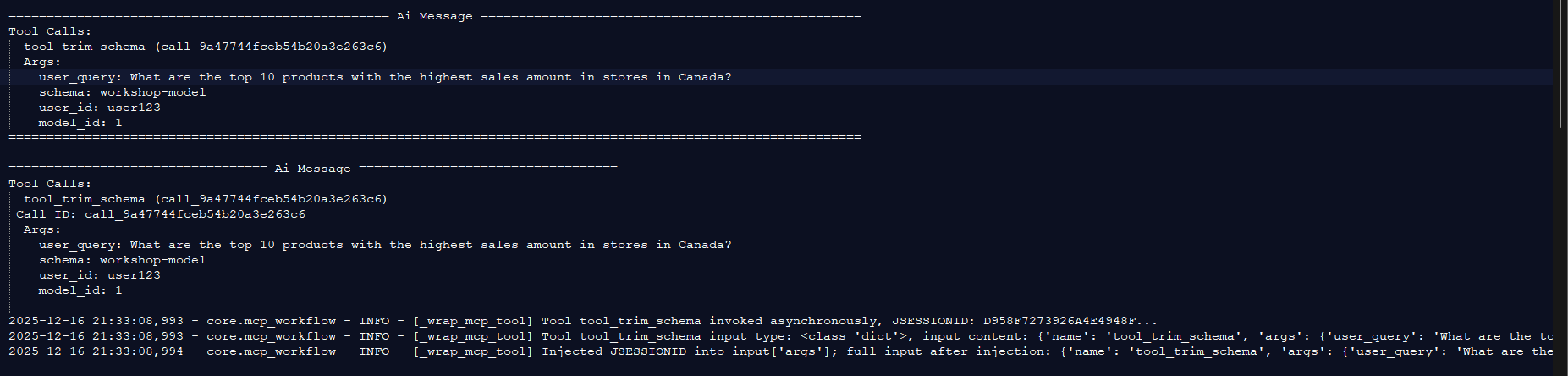

- MCP (Model Context Protocol): standardized tool calling and orchestration (so the Agent can invoke tools reliably)

- A set of clearly bounded tools: a controllable pipeline that connects “understand the model → plan → retrieve → prune → query” (so the Agent can do it correctly)

1) Why an AI Agent can’t rely on “one prompt + one generation” in BI

BI questions often involve multiple challenges at the same time:

- Ambiguous semantics: e.g., what exactly do “East Region,” “key accounts,” or “this month” mean in your definitions?

- Complex models: multiple cubes, hierarchies, calculated measures, and permission constraints

- Explainability requirements: you need not only the result, but also which metrics and filters were used

- Governance requirements: permissions, auditability, performance, cost control, and observability are unavoidable

So the more reliable approach is: decompose + orchestrate + validate.
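To make the "validate" step concrete, here is a minimal sketch that checks a generated plan against the semantic-layer metadata before anything runs. The function name `validate_plan`, the plan keys (`measures`, `group_by`), and the sample metadata are all hypothetical illustrations, not Datafor's actual API.

```python
def validate_plan(plan: dict, metadata: dict) -> list[str]:
    """Return a list of problems; an empty list means the plan only uses known objects."""
    errors = []
    known_measures = set(metadata["measures"])
    known_dimensions = set(metadata["dimensions"])
    for measure in plan.get("measures", []):
        if measure not in known_measures:
            errors.append(f"unknown measure: {measure}")
    for dimension in plan.get("group_by", []):
        if dimension not in known_dimensions:
            errors.append(f"unknown dimension: {dimension}")
    return errors


# Hypothetical metadata and plans, for illustration only.
METADATA = {"measures": ["Gross Profit", "Sales Amount"],
            "dimensions": ["Product Category", "Region"]}
good_plan = {"measures": ["Gross Profit"], "group_by": ["Product Category"]}
bad_plan = {"measures": ["Net Margin"], "group_by": ["Region"]}

print(validate_plan(good_plan, METADATA))
print(validate_plan(bad_plan, METADATA))
```

A plan that references a field the model does not define is rejected with a readable reason instead of reaching the query engine.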

2) The three pillars of Datafor AI Agent

A. Vector database: help the Agent “find it”

Here the vector database solves a simple problem:

When a user says “East region,” “best-sellers,” or “top customers,” how can the system quickly map that to the right model objects and candidate values?

Typical indexed content includes:

- semantic layer metadata (dimensions, levels, measures, calculated measures, business descriptions)

- text-based dimension values (city names, product names, customer names, etc.)

- business terms / synonyms / definitions / FAQs (optional)
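The retrieval idea can be sketched with a toy example. This assumes a bag-of-words count as a stand-in for a real embedding model and an in-memory list as a stand-in for the vector database; the index entries, `embed`, and `search` are hypothetical names chosen for illustration.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


# Hypothetical index: metadata, dimension values, and business terms.
INDEX = [
    {"kind": "measure", "name": "Gross Profit",
     "text": "gross profit revenue minus cost of goods sold"},
    {"kind": "level", "name": "Region",
     "text": "sales region east west north south"},
    {"kind": "member", "name": "[Region].[East]",
     "text": "east region eastern sales territory"},
    {"kind": "term", "name": "Key Accounts",
     "text": "key accounts top customers by annual revenue"},
]


def search(query: str, k: int = 2) -> list[dict]:
    """Return up to k index entries with non-zero similarity, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(entry["text"])), entry) for entry in INDEX]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [entry for score, entry in scored[:k] if score > 0]


print([entry["name"] for entry in search("East region")])
```

In a real deployment the word counts would be replaced by learned embeddings, which is what lets a phrase like "best-sellers" match a description that shares no literal words with it.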

B. MCP: help the Agent “call tools”

MCP turns “model capability” into “engineering capability” by:

- describing tools in a standard way: inputs, outputs, calling constraints

- enabling composable orchestration: multi-tool chaining, retries, fallbacks

- making tools replaceable and extensible: the workflow isn’t hard-coded into the prompt

In short: the vector DB handles retrieval, MCP handles orchestration and calling standards, and the tools ensure the workflow is done correctly.
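As a rough illustration of "describing tools in a standard way," the sketch below mimics the shape of an MCP tool definition (a name, a description, and a JSON-Schema-style input schema) in plain Python. It does not use an MCP SDK; the `Tool` class, `register`, `call_tool`, and the `get_model_metadata` tool are hypothetical names, and the required-field check and retry loop are simplifications.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str
    input_schema: dict[str, Any]               # JSON-Schema-style description of inputs
    handler: Callable[[dict[str, Any]], Any]   # the code that actually does the work


REGISTRY: dict[str, Tool] = {}


def register(tool: Tool) -> None:
    REGISTRY[tool.name] = tool


def call_tool(name: str, arguments: dict[str, Any], retries: int = 1) -> Any:
    """Check required arguments, then call the handler, retrying transient failures."""
    tool = REGISTRY[name]  # raises KeyError for an unknown tool
    missing = [key for key in tool.input_schema.get("required", [])
               if key not in arguments]
    if missing:
        raise ValueError(f"{name}: missing required arguments {missing}")
    for attempt in range(retries + 1):
        try:
            return tool.handler(arguments)
        except RuntimeError:
            if attempt == retries:
                raise  # out of retries: surface the failure to the orchestrator


# Hypothetical tool with a canned response, for illustration only.
register(Tool(
    name="get_model_metadata",
    description="Return the measures of a cube visible to the caller.",
    input_schema={"type": "object",
                  "properties": {"cube": {"type": "string"}},
                  "required": ["cube"]},
    handler=lambda args: {"cube": args["cube"], "measures": ["Gross Profit"]},
))

print(call_tool("get_model_metadata", {"cube": "Sales"}))
```

Because the workflow only depends on the registry and the schema, a tool can be swapped or added without touching the prompt.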

C. Tools: help the Agent “do it right”

In Datafor AI Agent, tools are designed with clear responsibilities and boundaries—each tool solves one class of problems:

- Model metadata tool: fetch the “ground truth” semantic layer—available cubes, dimensions, levels, measures, calculated measures, etc.

- Query execution tool: run the final query (SQL/MDX/QueryModel, etc.) and return results

- Model analysis tool: structural reasoning such as “which cube to use,” “which fields apply,” “is the path feasible”

- Field value mapping tool: map user phrases (region/product/customer) to candidate members/values in the model

- RAG query planning tool: turn “question + retrieved context + model capabilities” into an executable plan

- RAG search tool: retrieve the most relevant definitions, synonyms, field descriptions, and business rules from the vector DB

- Model pruning tool: remove irrelevant model elements for the current question to shrink the search space and context length

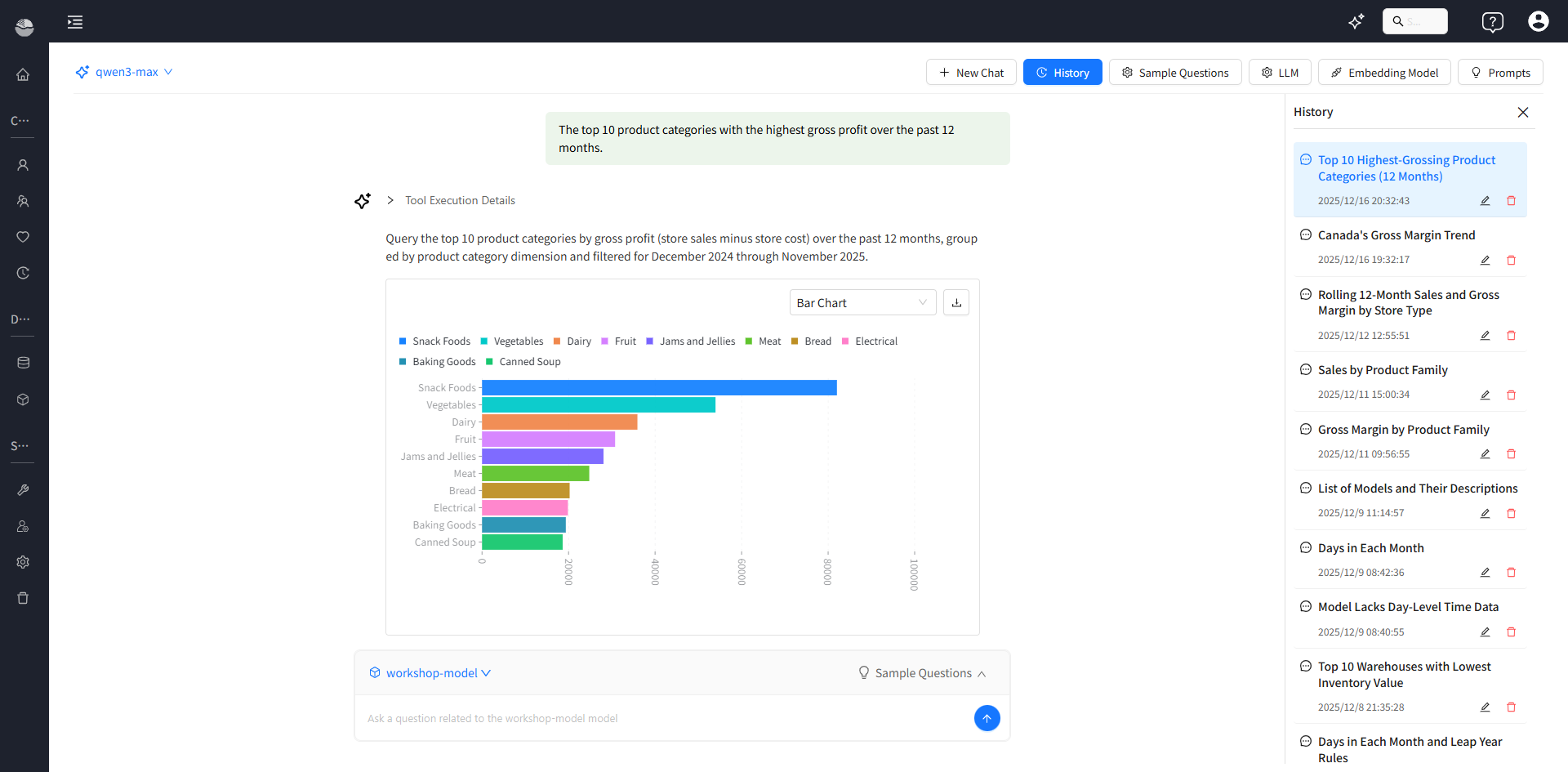
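The model pruning tool is the easiest of these to sketch. The example below assumes the model is a plain dictionary and that earlier steps have already produced a list of relevant object names; `prune_model` and the sample `MODEL` are hypothetical, and a real implementation would also need to keep hierarchies and dependencies of calculated measures.

```python
# Hypothetical semantic model, reduced to names for illustration.
MODEL = {
    "cube": "Sales",
    "dimensions": [{"name": "Product Category"}, {"name": "Region"},
                   {"name": "Customer"}, {"name": "Date"}],
    "measures": [{"name": "Gross Profit"}, {"name": "Sales Amount"},
                 {"name": "Discount"}],
}


def prune_model(model: dict, relevant_names: list[str]) -> dict:
    """Keep only dimensions and measures whose names were judged relevant."""
    keep = {name.lower() for name in relevant_names}
    pruned = {"cube": model["cube"]}
    for section in ("dimensions", "measures"):
        pruned[section] = [item for item in model[section]
                           if item["name"].lower() in keep]
    return pruned


pruned = prune_model(MODEL, ["gross profit", "Product Category", "Date"])
print(pruned)
```

Only the pruned model is passed on to planning, which is where the reduction in search space and context length comes from.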

3) Putting it together: what happens during a typical question?

Example question:

“The top 10 product categories with the highest gross profit over the past 12 months.”

A controlled pipeline usually looks like:

- Fetch and understand the model (metadata + model analysis)

- Retrieve context (RAG search) and ground key terms into model objects (value mapping)

- Narrow the scope (model pruning)

- Generate the query plan (RAG planning)

- Execute and return results (query execution)

The key principle is: AI handles understanding and planning; the system handles execution and governance.
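The steps above can be sketched as a small orchestrator that runs each stage in order and records what it produced. Every step here is a stub with canned output; the step names, the state keys, and `run_pipeline` are assumptions made for illustration and do not reflect Datafor's internal interfaces.

```python
from typing import Any, Callable

Step = Callable[[dict[str, Any]], dict[str, Any]]


def fetch_metadata(state):
    # Stub for the metadata + model analysis tools.
    return {"metadata": {"measures": ["Gross Profit", "Sales Amount"],
                         "dimensions": ["Product Category", "Date", "Region"]}}


def retrieve_and_map(state):
    # Stub for RAG search + value mapping: user phrases grounded to model objects.
    return {"terms": {"gross profit": "Gross Profit",
                      "product categories": "Product Category"}}


def prune(state):
    keep = set(state["terms"].values())
    md = state["metadata"]
    return {"pruned": {"measures": [m for m in md["measures"] if m in keep],
                       "dimensions": [d for d in md["dimensions"] if d in keep]}}


def plan(state):
    return {"plan": {"measures": state["pruned"]["measures"],
                     "group_by": state["pruned"]["dimensions"],
                     "top_n": 10, "time_range": "last 12 months"}}


def execute(state):
    # Placeholder: a real query execution tool would run the plan here.
    return {"rows": []}


def run_pipeline(question: str, steps: list[tuple[str, Step]]):
    """Run steps in order, merging outputs into shared state and keeping a trace."""
    state: dict[str, Any] = {"question": question}
    trace = []
    for name, step in steps:
        output = step(state)
        state.update(output)
        trace.append({"step": name, "output": output})
    return state, trace


STEPS = [("metadata", fetch_metadata), ("retrieve", retrieve_and_map),
         ("prune", prune), ("plan", plan), ("execute", execute)]
state, trace = run_pipeline(
    "The top 10 product categories with the highest gross profit "
    "over the past 12 months.", STEPS)
print([entry["step"] for entry in trace])
```

The trace is the point of the design: each entry is structured evidence of which objects, filters, and plan were used, which a single one-shot generation cannot provide.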

4) Benefits of this architecture

- More stable accuracy: fewer hallucinated fields or wrong metrics

- Controlled cost: pruning + retrieval reduces context size and call overhead

- Explainable and auditable: each step can output structured evidence

- Easy to extend: a new tool adds capability to the whole pipeline without rewriting the existing steps

- Fits embedded analytics: permissions, tenant isolation, and auditing can be enforced at the API layer

Closing

Vector databases solve “find it,” MCP solves “invoke it,” and the toolchain solves “do it right.”

Combined, these three enable Datafor AI Agent to deliver a stable, explainable, and governable natural-language analytics experience—even with complex semantic models and real-world business definitions.
